
T-720-ATAI-2021 Main


UNDERSTANDING: Methodologies

Methodology

What Is a Methodology?

| What it is | The methods - tools and techniques - we use to study a phenomenon. |

| Examples | - Comparative experiments (for the answers we want Nature to ultimately give). - Telescopes (for things far away). - Microscopes (for all things smaller than the human eye can see unaided). - Simulations (for complex interconnected systems that are hard to untangle). |

| Why it Matters | Methodology determines what we do with respect to a phenomenon in order to figure it out, and therefore directly determines our progress when studying it, including the speed with which we can make that progress. Methodology affects how we think about a phenomenon, including our solutions, expectations, and imagination. Methodology determines the possible scope of outcomes and directly influences the shape of our solutions: our answers to scientific questions. Methodology is therefore a primary determinant of scientific progress. |

| The main AI methodology | AI never really had a proper methodology discussion as part of its mainstream scientific discourse. Only two or three approaches to AI can properly be called 'methodologies': BDI (belief, desire, intention), subsumption, and decision theory. As a result, AI inherited the run-of-the-mill CS methodologies by default. |

| Constructionist AI | Methods used to build AI systems by hand. |

| Constructivist AI | Methods aimed at creating AI systems that autonomously generate, manage, and use their knowledge. |

What Are Methodologies Good For?

| Applying a Methodology | results in a family of architectures: The methodology “allows” (“sets the stage”) for what should and may be included when we design our architecture. The methodology is the “tool for thinking” about a design space. (Contrast with requirements, which describe the goals and constraints (negative goals)). |

| Following a Methodology | results in a particular architecture. |

| CAIM Relies on Models | CAIM takes seriously Conant & Ashby's proof that every good controller (regulator) of a system must be a model of that system (the Good Regulator Theorem), putting models at its center. This stance was prevalent in the early days of AI (the first two decades) but fell into disfavor due to behaviorism (in psychology and AI). |

| Example | The Autocatalytic Endogenous Reflective Architecture (AERA) is the only architecture to result directly from the application of CAIM. It is model-based and model-driven, in an event-driven way: the models' left-hand terms are matched to situations to determine their relevance at any point in time, and when a model matches, its right-hand term is injected into memory (more on this below). |

| In Other Words | AERA Models are a way to represent knowledge. But what are models, really, and what might they look like in this context? |
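As a rough illustration of the event-driven matching described in the Example row, the Python sketch below treats a model as a pair of functions: a left-hand term that tests whether a new fact in memory is relevant, and a right-hand term that builds the fact to inject when it is. This is a hypothetical toy (the `Model` class, `run_event_driven`, and the `(variable, value)` fact format are all assumptions), not AERA's actual model format or implementation.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, List, Tuple

Fact = Tuple[str, str]  # hypothetical fact format: (variable, value)


@dataclass
class Model:
    """Toy model: a left-hand term (relevance test) and a right-hand term (fact to inject)."""
    name: str
    lhs: Callable[[Fact], bool]
    rhs: Callable[[Fact], Fact]


def run_event_driven(models: List[Model], initial: List[Fact], max_events: int = 100) -> List[Fact]:
    """Treat every new fact as an event; inject the right-hand term of each model it matches."""
    memory: List[Fact] = []
    events = deque(initial)
    processed = 0
    while events and processed < max_events:
        fact = events.popleft()
        processed += 1
        if fact in memory:
            continue  # already known: nothing new to react to
        memory.append(fact)
        for model in models:
            if model.lhs(fact):                 # left-hand term matches the situation
                events.append(model.rhs(fact))  # right-hand term is injected as a new event
    return memory


# Usage: two chained models turn one observation into two predictions.
models = [
    Model("heat", lambda f: f == ("stove", "on"), lambda f: ("pan", "hot")),
    Model("boil", lambda f: f == ("pan", "hot"), lambda f: ("water", "boiling")),
]
memory = run_event_driven(models, [("stove", "on")])
```

Because matching is triggered by each new fact rather than by a fixed control loop, the second model fires only after the first one has injected its result into memory.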

Standard Methodologies

| Reductionism | The method of isolating parts of a complex phenomenon or system in order to simplify and speed up our understanding of it. In science, when you want to make a new problem tractable, you reduce it until it's small enough to be addressable by available methods. Most of the time this reduction proceeds in light of currently available methods and tools. Here we can call this “good old fashioned run-of-the-mill reductionism”. An enormous number of problems in science have been successfully addressed through this approach. See Reductionism on Wikipedia. |

| Does Reductionism Always Work The Same Way? | In short, no. For phenomena where current tools and methods do not suffice, new tools and methods must be developed. (GMI may very well be just such a phenomenon.) |

| Occam's Razor | A key principle of reductionism. When faced with two alternative explanations that both explain a phenomenon equally well, choose the simpler explanation. See also Occam's Razor. |

| How Should Occam's Razor Cut? | How the principles of Occam's Razor and reductionism are used must depend on the phenomenon under study. Using a telescope to study electricity or a voltmeter to study faraway stars is unlikely to lead to quick progress. Going wild with Occam's Razor on your subject matter will lead to a bloody mess: like any powerful tool, its careful application is key to producing beneficial results. |
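The selection rule stated in the Occam's Razor row can be sketched in a few lines of Python. How "equally well" and "simpler" are measured is the hard part and depends on the phenomenon; here both are assumed to be given as numbers (the hypothetical `error` and `complexity` fields), and the tolerance is an arbitrary choice.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Explanation:
    name: str
    error: float      # misfit to the observations (lower is better)
    complexity: int   # hypothetical simplicity measure, e.g. number of free parameters


def occam_choose(candidates: List[Explanation], tolerance: float = 1e-9) -> Explanation:
    """Keep only explanations that fit as well as the best one, then pick the simplest of those."""
    best_error = min(c.error for c in candidates)
    equally_good = [c for c in candidates if c.error - best_error <= tolerance]
    return min(equally_good, key=lambda c: c.complexity)


candidates = [
    Explanation("constant", error=5.0, complexity=1),
    Explanation("linear", error=0.0, complexity=2),
    Explanation("cubic", error=0.0, complexity=4),
]
choice = occam_choose(candidates)
```

The "constant" explanation is the simplest of all but is never chosen, because the razor only cuts among explanations that explain the phenomenon equally well; choosing the simplest explanation regardless of fit would discard the one that actually works.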

Minds & Methodologies

Minds: Heterogeneous Large Densely-Coupled Systems

| HeLDs | Heterogeneous, large, densely-coupled systems. |

| So You Want To Create Human-Level AI? | If we want to make smarter machines, we should begin by isolating the requirements. Requirements for AGI were covered earlier; that's a long list (but certainly not impossible to meet!). Are there other concerns? Yes: we need to pick an appropriate methodology for this task. |

| Mind: What Kind of System? | Mind contains a large number of processes (large size), of many different kinds, implementing a variety of complex functions (heterogeneity), that work closely together in an integrated manner to implement global emergence (dense coupling); it is a heterogeneous large densely coupled system, a HeLD. |

| HeLDs | HeLDs are found everywhere in nature: ecosystems, forests, societies, traffic, economies, and yes, minds. In fact, minds are a special kind of self-governed HeLD. |

| How to Study HeLDs | HeLDs require special consideration of methodology - good old fashioned run-of-the-mill reductionism may not suffice. |

How to Study HeLDs Scientifically


Current Methodologies: ConstructiONist

| Constructionist Methods | A constructionist methodology requires an intelligent designer who manually (or via scripts) arranges selected components that together make up a system of parts (i.e. an architecture) that can act in particular ways. Examples: automobiles, telephone networks, computers, operating systems, the Internet, mobile phones, apps, etc. |


| Traditional CS Software Development Methods | On the theoretical side, the majority of mathematical methodologies are of the constructionist kind (with some applied math for the natural sciences counting as exceptions). On the practical side, programs are largely hand-coded, and algorithms are invented and implemented manually. Systems creation in CS is “co-owned” by the field of engineering. All programming languages are designed under the assumption that they will be used by a human-level programmer. |


| BDI: Belief, Desire, Intention | BDI can hardly be called a “methodology” and is more of a framework for inspiration. Picking three terms out of psychology, BDI methods emphasize goals (desire), plans (intention), and revisable knowledge (beliefs), all of which are good and fine. Methodologically speaking, however, none of the basic features of a true scientific methodology (algorithms, systems engineering principles, or strategies) are to be found in papers on this approach. |

| Subsumption Architecture | This is perhaps the best-known AI-specific methodology worthy of being categorized as a 'methodology'. Originally presented as an “architecture”, it is more of an approach that results in architectures in which subsumption, operating under particular principles, is a major organizational feature. |

| Why it's important | Virtually all methodologies we have for creating software are of the constructionist kind. Unfortunately, few methodologies step outside of that frame. |

Key Limitations of Constructionist Methodologies

| Static | System components are fairly static. Manual construction limits the complexity that can be built into each component. |

| Size | The sheer number of components that can form a single architecture is limited by what a designer or team can handle. |

| Scaling | The components and their interconnections in the architecture are managed by algorithms that are hand-crafted themselves, and thus also of limited flexibility. |

| Result | Together these three problems remove hopes of autonomous architectural adaptation and system growth. |

| Conclusion | In the context of artificial intelligences that can handle highly novel Tasks, Problems, Situations, Environments and Worlds, no constructionist methodology will suffice. |

| Key Problem | Reliance on hand-coding, using programming methods that require human-level intelligence; as a result, the system cannot program itself. Another way to say it: a strong requirement for an outside designer. |

| Contrast with | Constructivist AI |

ConstructiVist AI Methodology (CAIM)

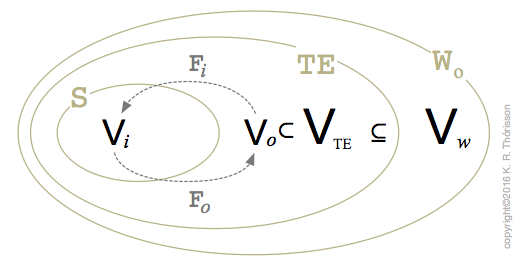

| What it is | A term for labeling a methodology for AGI based on two main assumptions: (1) the way knowledge is acquired by systems with general intelligence requires the automatic integration, management, and revision of data in a way that infuses meaning into information structures, and (2) constructionist approaches do not sufficiently address this or other issues of key importance for systems with high levels of general intelligence and existential autonomy. |


| Why We Need It | Most AI methodology to date has automatically inherited all standard software methodological principles. This approach assumes that software architectures are hand-coded and that (the majority of) the system's knowledge and skills are hand-fed to it. In sharp contrast, CAIM assumes that the system acquires the vast majority of its knowledge on its own (except for a small seed) and manages its own growth. It may also change its own architecture over time, due to experience and learning. |

| Why it's important | It is the first and only attempt so far at explicitly proposing an alternative to the current methodologies and prevailing paradigm used throughout AI and computer science. |

| What it's good for | Replacing, by and large, the present methods in AI, since these will not suffice for addressing the full scope of the phenomenon of intelligence as seen in nature. |

| What It Must Do | We are looking for more than a linear increase in the power of our systems to operate reliably in a variety of (unforeseen, novel) circumstances. The methodology should help meet that requirement. |

| Basic tenet | That an AGI must be able to handle new Problems in new Task-Environments; to do so, it must be able to create new knowledge with new Goals (and sub-goals); to do so, its architecture must support the automatic generation of meaning; and constructionist methodologies do not support the creation of such system architectures. |

| Roots | Piaget | proposed the constructivist view of human knowledge acquisition, which states (roughly speaking) that a cognitive agent (e.g. a human) generates its own knowledge through experience. |

| von Glasersfeld | “…‘empirical teleology’ … is based on the empirical fact that human subjects abstract ‘efficient’ causal connections from their experience and formulate them as rules which can be projected into the future.” REF CAIM was developed in tandem with this architecture/architectural blueprint. |


| Architectures built using CAIM | AERA | Autocatalytic, Endogenous, Reflective Architecture REF. Built before CAIM emerged, but based on many of the assumptions consolidated in CAIM. |

| NARS | Non-Axiomatic Reasoning System REF “If the existing domain-specific AI techniques are seen as tools, each of which is designed to solve a special problem, then to get a general-purpose intelligent system, it is not enough to put these tools into a toolbox. What we need here is a hand. To build an integrated system that is self-consistent, it is crucial to build the system around a general and flexible core, as the hand that uses the tools [assuming] different forms and shapes.” – P. Wang, 2004 |


| Limitations | As CAIM is a young methodology, very little hard data is available on its effectiveness. What evidence does exist, however, indicates that it is more promising than constructionist methodologies for achieving AGI. |

Architectural Principles of a CAIM-Developed System (What CAIM Targets)

| Self-Construction | It is assumed that a system must amass the vast majority of its knowledge autonomously. This is partly due to the fact that it is (practically) impossible for any human or team(s) of humans to construct by hand the knowledge needed for an AGI system, and even if this were possible it would still leave unanswered the question of how the system will acquire knowledge of truly novel things, which we consider a fundamental requirement for a system to be called an AGI system. |

| Baby Machines | To some extent, an AGI capable of growing throughout its lifetime will be what may be called a “baby machine”, because relative to later stages in its life, such a machine will initially seem “baby-like”. While the mechanisms constituting an autonomous learning baby machine may not be complex compared to a “fully grown” cognitive system, they are nevertheless likely to seem large compared to the AI systems built today, though this perceived size may stem from the complexity of the mechanisms and their interactions rather than from the sheer number of lines of code. |

| Semiotic Opaqueness | No communication between two agents / components in a system can take place unless they share a common language, or encoding-decoding principles. Without this they are semantically opaque to each other. Without communication, no coordination can take place. |

| Systems Engineering | Due to the complexity of building a large system (picture, e.g., an airplane), clear and concise bookkeeping of each part, and of which parts it interacts with, must be kept to ensure the holistic operation of the resulting system. In a (cognitively) growing system in a dynamic world, where the system auto-generates models of the phenomena that it sees, each of which must be tightly integrated yet easily manipulable and clearly separable, the system must itself ensure the semiotic transparency of its constituent parts. This can only be achieved by automatic mechanisms residing in the system itself; it cannot be ensured manually by a human engineer, or even by a large team of them. |
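A minimal Python sketch of the kind of bookkeeping described in the Systems Engineering row, which a growing system would have to perform on itself whenever it generates a new part: each part is registered with the encoding it writes and the encodings it can read, and a link between two parts is recorded only if they are not semiotically opaque to each other. The `Part` and `Registry` names and the string labels standing in for encodings are assumptions for illustration; real encodings would be far richer than a single label.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Part:
    name: str
    emits: str          # encoding of what this part writes (hypothetical label)
    accepts: Set[str]   # encodings this part is able to decode


@dataclass
class Registry:
    """Bookkeeping of every part and of which parts it interacts with."""
    parts: Dict[str, Part] = field(default_factory=dict)
    links: Set[Tuple[str, str]] = field(default_factory=set)  # (sender, receiver)

    def add_part(self, part: Part) -> None:
        self.parts[part.name] = part

    def connect(self, sender: str, receiver: str) -> bool:
        """Record a link only if the receiver can decode what the sender emits."""
        if self.parts[sender].emits not in self.parts[receiver].accepts:
            return False  # semiotically opaque pair: no communication possible
        self.links.add((sender, receiver))
        return True

    def interacts_with(self, name: str) -> List[str]:
        """All parts linked to `name`, in either direction."""
        return sorted({r for s, r in self.links if s == name} | {s for s, r in self.links if r == name})


registry = Registry()
registry.add_part(Part("vision", emits="percept", accepts={"command"}))
registry.add_part(Part("planner", emits="command", accepts={"percept", "goal"}))
registry.add_part(Part("motor", emits="feedback", accepts={"command"}))
ok_1 = registry.connect("vision", "planner")  # planner can decode percepts
ok_2 = registry.connect("vision", "motor")    # motor cannot decode percepts
```

The point of the sketch is where the check lives: the registry refuses opaque links at the moment a part is added or connected, so no human needs to audit the growing set of parts afterwards.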

| Self-Modeling | Cognitive growth, in which the cognitive functions themselves improve with training, can only be supported by a self-modifying mechanism based on self-modeling. If there is no model of self, there can be no targeted improvement of existing mechanisms. |
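To make the link between self-modeling and targeted improvement concrete, here is a hypothetical Python sketch in which the system's "model of self" is nothing more than a success rate per mechanism. That is a drastic simplification chosen for illustration (the `SelfModel` class and its methods are assumptions, not part of any described architecture), but it shows why, without such a model, there is no basis for choosing which mechanism to improve.

```python
from collections import defaultdict
from typing import Optional


class SelfModel:
    """Toy self-model: the system's own record of how well each of its mechanisms performs."""

    def __init__(self) -> None:
        self.successes = defaultdict(int)
        self.trials = defaultdict(int)

    def record(self, mechanism: str, success: bool) -> None:
        self.trials[mechanism] += 1
        self.successes[mechanism] += int(success)

    def success_rate(self, mechanism: str) -> float:
        n = self.trials.get(mechanism, 0)
        return self.successes.get(mechanism, 0) / n if n else 0.0

    def improvement_target(self) -> Optional[str]:
        """Return the worst-performing known mechanism; with no self-knowledge there is nothing to rank."""
        if not self.trials:
            return None
        return min(self.trials, key=self.success_rate)


me = SelfModel()
for outcome in (True, True, True, False):
    me.record("attention", outcome)
for outcome in (True, False, False, False):
    me.record("planning", outcome)
target = me.improvement_target()
```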

| Self-Programming | The system must be able to invent, inspect, compare, integrate, and evaluate architectural structures, in part or in whole. |

| Pan-Architectural Pattern Matching | To enable autonomous holistic integration, the architecture must be capable of comparing (copies of) itself to parts of itself, in part or in whole, whether the comparison concerns structure, the effects of time, or some other aspect or characteristic of the architecture. To decide, for instance, whether a new attention mechanism is better than the old one, various forms of comparison must be possible. |

| The “Golden Screw” | An architecture meeting all of the above principles is not likely to be “based on a key principle” or even two – it is very likely to involve a whole set of new and fundamentally foreign principles that make their realization possible! |

Example of CAIM in Action: AERA Models

How AERA Learns

| In a Nutshell | AERA does not only perform tasks it already knows; it can also learn new things. Having learned new things, it can go on to learn still more. Any of those new things can be novel, and the novel things can range from highly similar to quite different from what it already knows; an AERA agent can leverage this to implement what we have called cumulative learning. Learning a number of diverse novel things requires something over and beyond what traditional learning methods offer: hypothesis generation through analogy. |

| Hypothesis Generation | To deal with new phenomena, AERA creates hypotheses about them: which variables matter, how these are related, how they respond to actions, etc. These hypotheses are created based on: 1. correlations between measurements taken in the context of the phenomenon; 2. how similar parts of the phenomenon are to other known phenomena ('similarity' is another word for “analogy”). |
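The first source of hypotheses listed in the Hypothesis Generation row (correlations between measurements) can be sketched as follows: every pair of measured variables whose values co-vary strongly becomes a candidate hypothesis. The Pearson coefficient, the 0.8 threshold, and the variable names are assumptions for illustration. A correlation is only a hypothesis about which variables matter; whether the relation is causal still has to be tested, for example by acting on the phenomenon.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation of two equally long series of measurements."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0  # a variable that never changes offers no correlational evidence
    return cov / (sx * sy)


def candidate_relations(measurements: Dict[str, List[float]],
                        threshold: float = 0.8) -> List[Tuple[str, str, float]]:
    """Propose every pair of variables that co-vary strongly as a hypothesis worth testing."""
    hypotheses = []
    for a, b in combinations(sorted(measurements), 2):
        r = pearson(measurements[a], measurements[b])
        if abs(r) >= threshold:
            hypotheses.append((a, b, round(r, 3)))
    return hypotheses


measurements = {
    "flame_level": [0, 1, 2, 3, 4],
    "water_temp": [20, 30, 40, 50, 60],
    "room_noise": [5, 1, 4, 1, 5],
}
hypotheses = candidate_relations(measurements)
```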

| Analogy | Analogy is the systematic comparison of two things, where some parts of those things are ignored while others are rated on a scale as to how similar they are. |
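The definition of analogy in the row above (some parts ignored, the others rated on a scale of similarity) can be made concrete as a weighted feature comparison. The feature names, the weights, and the graded formula used for numeric features are all assumptions for illustration, loosely borrowing two of the objects from the demo below; AERA's own analogy mechanism is not described here, and this sketch does not claim to reproduce it.

```python
from typing import Dict, FrozenSet, Union

Value = Union[str, float]


def analogy_score(a: Dict[str, Value], b: Dict[str, Value],
                  weights: Dict[str, float], ignore: FrozenSet[str] = frozenset()) -> float:
    """Compare two things feature by feature, skipping ignored features; returns a score in [0, 1]."""
    total = score = 0.0
    for feature, w in weights.items():
        if feature in ignore:
            continue  # this part of the things is deliberately left out of the comparison
        total += w
        va, vb = a.get(feature), b.get(feature)
        if va is None or vb is None:
            continue  # a feature only one side has counts as dissimilar
        if isinstance(va, (int, float)) and isinstance(vb, (int, float)):
            denom = max(abs(va), abs(vb)) or 1.0
            score += w * max(0.0, 1.0 - abs(va - vb) / denom)  # graded similarity
        else:
            score += w * (1.0 if va == vb else 0.0)  # all-or-nothing similarity
    return score / total if total else 0.0


glass = {"shape": "bottle", "material": "glass", "volume_l": 1.0, "color": "green"}
plastic = {"shape": "bottle", "material": "plastic", "volume_l": 1.0, "color": "clear"}
weights = {"shape": 1.0, "material": 1.0, "volume_l": 1.0, "color": 1.0}
```

Which features are ignored decides the outcome: compared on everything, the two bottles score 0.5; ignoring color and material, they look identical (score 1.0), which is exactly the kind of analogy that lets knowledge about one transfer to the other.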

| Model Creation | AERA creates models that capture the relations between variables and other models, esp. causal relations. This makes AERA models very effective for 1. Generating predictions. 2. Creating plans. 3. Explaining how 'things hang together', and 4. Re-creating systems. |
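Uses 1 and 2 from the Model Creation row (predictions and plans) can come from the same causal models run in opposite directions. The Python sketch below assumes models reduced to `(cause, effect)` label pairs, and `predict` and `plan` are hypothetical names; the models described here also relate variables and other models, which a pair of labels cannot capture, so this only illustrates the forward/backward idea.

```python
from typing import Iterable, List, Optional, Set, Tuple

CausalModel = Tuple[str, str]  # hypothetical form: (cause, effect)


def predict(models: List[CausalModel], state: Iterable[str], max_steps: int = 10) -> Set[str]:
    """Forward chaining: what will follow from the current state?"""
    known = set(state)
    for _ in range(max_steps):
        new = {effect for cause, effect in models if cause in known} - known
        if not new:
            break
        known |= new
    return known


def plan(models: List[CausalModel], goal: str, actions: Set[str],
         max_depth: int = 10) -> Optional[List[str]]:
    """Backward chaining: which action, through which intermediate effects, brings about the goal?"""
    chain = [goal]
    for _ in range(max_depth):
        if chain[-1] in actions:
            return list(reversed(chain))
        causes = [cause for cause, effect in models if effect == chain[-1]]
        if not causes:
            return None  # no known way to bring this about
        chain.append(causes[0])  # naive: follow the first known cause, no search
    return None


models = [("press_switch", "light_on"), ("light_on", "room_bright")]
```

The planner here follows a single chain without search or backtracking; it is meant only to show that a model capturing "A causes B" supports both "A, therefore expect B" and "want B, therefore do A".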

General Form of AERA Models

Model Acquisition Function in AERA

Model Generation & Evaluation

AERA Demo

| TV Interview | The agent S1 watched two humans engaged in a “TV-style” interview about the recycling of six everyday objects made of various materials. The results are recorded in a set of three videos: human-human interaction (what S1 observes and learns from); human-S1 interaction (S1 interviewing a human); S1-human interaction (S1 being interviewed by a human). |

| Data | S1 received real-time timestamped data on the 3D movement of the humans (digitized via appropriate tracking methods at 20 Hz), words generated by a speech recognizer, and prosody (fundamental pitch of voice at 60 Hz, along with timestamped starts and stops). |
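At these rates each movement frame (50 ms at 20 Hz) spans three prosody samples (60 Hz / 20 Hz = 3), so the streams have to be aligned by timestamp before they can be related to each other. A minimal Python sketch of such an alignment, assuming idealized integer-millisecond clocks; `ms_timestamps` and `align` are hypothetical helpers, and this is not a description of how S1's input pipeline actually worked.

```python
from bisect import bisect_left
from typing import List


def ms_timestamps(rate_hz: int, n: int) -> List[int]:
    """Idealized integer-millisecond timestamps for n samples at a fixed rate (an assumption)."""
    return [round(i * 1000 / rate_hz) for i in range(n)]


def align(frame_ts: List[int], frame_period_ms: int, other_ts: List[int]) -> List[List[int]]:
    """For each frame starting at t, list the indices of other-stream samples in [t, t + period)."""
    windows = []
    for t in frame_ts:
        lo = bisect_left(other_ts, t)                    # first sample at or after t
        hi = bisect_left(other_ts, t + frame_period_ms)  # first sample belonging to the next frame
        windows.append(list(range(lo, hi)))
    return windows


movement = ms_timestamps(20, 3)  # 20 Hz: one frame every 50 ms
prosody = ms_timestamps(60, 9)   # 60 Hz: one sample roughly every 16.7 ms
windows = align(movement, 50, prosody)
```

The timestamped words from the speech recognizer could be bucketed the same way, since `align` only needs a sorted list of timestamps.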

| Seed | The seed consisted of a handful of top-level goals for each agent in the interview (interviewer and interviewee), and a small knowledge base about entities in the scene. |

| What Was Given | * actions: grab, release, point-at, look-at (defined as event types constrained by geometric relationships) * stopping the interview clock ends the session * objects: glass-bottle, plastic-bottle, cardboard-box, wooden-cube, newspaper, wooden-cube * objects have properties (e.g. made-of) * interviewee-role * interviewer-role * Model for interviewer * top-level goal of interviewer: prompt interviewee to communicate * in interruption case: an imposed interview duration time limit * Models for interviewee * top-level goal of interviewee: to communicate * never communicate unless prompted * communicate about properties of objects being asked about, for as long as there still are properties available * don’t communicate about properties that have already been mentioned |
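To make the interviewee rules from the seed concrete, here is a Python sketch of them as hand-written logic. The data layout and the `recyclable` property are hypothetical, and the `Interviewee` class is only an illustration of the rules as stated, not S1's representation. It also covers only the kind of knowledge that was given: the skills listed under What Had To Be Learned (turn-taking, deictic reference, word order) were acquired by S1 from observation, not written like this.

```python
from typing import Dict, List, Optional, Set, Tuple

Property = Tuple[str, str]  # hypothetical: (property name, value), e.g. ("made-of", "glass")


class Interviewee:
    """Toy rendering of the interviewee rules given in the seed."""

    def __init__(self, properties: Dict[str, List[Property]]) -> None:
        self.properties = properties
        self.mentioned: Set[Tuple[str, str]] = set()  # (object, property) pairs already communicated

    def respond(self, prompted: bool, obj: str) -> Optional[Tuple[str, str, str]]:
        if not prompted:
            return None  # never communicate unless prompted
        for name, value in self.properties.get(obj, []):
            if (obj, name) not in self.mentioned:  # don't repeat a property already mentioned
                self.mentioned.add((obj, name))
                return (obj, name, value)          # talk about the object being asked about
        return None  # no properties left to communicate


s1 = Interviewee({"glass-bottle": [("made-of", "glass"), ("recyclable", "yes")]})
```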

| What Had To Be Learned | GENERAL INTERVIEW PRINCIPLES * word order in sentences (with no a priori grammar) * disambiguation via co-verbal deictic references * role of interviewer and interviewee * interview involves serialization of joint actions (a series of Qs and As by each participant) MULTIMODAL COORDINATION & JOINT ACTION * take turns speaking * co-verbal deictic reference * manipulation as deictic reference * looking as deictic reference * pointing as deictic reference INTERVIEWER * to ask a series of questions, not repeating questions about objects already addressed * “thank you” stops the interview clock * interruption condition: the phrase “hold on, let’s go to the next question” can be used to keep the interview within time limits INTERVIEWEE * what to answer based on what is asked * an object property is not spoken of if it is not asked for * a silence from the interviewer means “go on” * a nod from the interviewer means “go on” |

| Result | After observing two humans interact in a simulated TV interview for some time, the AERA agent S1 takes the role of interviewee, continuing the interview in precisely the same fashion as before and answering the questions of the human interviewer (see videos HH.no_interrupt.mp4 and HH.no_interrupt.mp4 for the human-human interaction that S1 observed; see HM.no_interrupt_mp4 and HM_interrupt_mp4 for other examples of the skills that S1 has acquired by observation). In the “interrupt” scenario, S1 has learned to use interruption as a method to keep the interview from going over a pre-defined time limit. |

2021©K.R.Thórisson

Last modified: 2024/04/29 13:33