
T-720-ATAI-2016

Lecture Notes, F-9 09.02.2016

Requirements for Evaluation: Features That Evaluators Should Be Able To Control

| Determinism | Both full determinism and partial stochasticity (for realism regarding, e.g., noise and stochastic events) must be supported. |

| Ergodicity | The reachability of (aspects of) states from others determines the degree to which the agent can undo things and get second chances. |

| Continuity | For evaluation to be relevant to, e.g., robotics, it is critical to allow continuous variables, to appropriately represent continuous spatial and temporal features. The degree to which continuity is approximated (discretization granularity) should be changeable for any variable. |

| Asynchronicity | Any action in the task-environment, including sensors and controls, may operate on arbitrary time scales and interact at any time, letting an agent respond when it can. |

| Dynamism | A static task-environment’s state only changes in response to the AI’s actions. The most simplistic ones are step-lock, where the agent makes one move and the environment responds with another (e.g. board games). More complex environments can be dynamic to various degrees in terms of speed and magnitude, and their changes may be caused by interactions between environmental factors or simply by the passage of time. |

| Observability | Task-environments can be partially observable to varying degrees, depending on the type, range, refresh rate, and precision of available sensors, affecting the difficulty and general nature of the task-environment. |

| Controllability | The control that the agent can exercise over the environment to achieve its goals can be partial or full, depending on the capability, type, range, inherent latency, and precision of available actuators. |

| Multiple Parallel Causal Chains | Any generally intelligent system in a complex environment is likely to be trying to meet multiple objectives that can be co-dependent in various ways through any number of causal chains in the task-environment. Actions, observations, and tasks may occur sequentially or in parallel (at the same time). This is needed to implement real-world clock environments. |

| Periodicity | Many structures and events in nature are repetitive to some extent, and therefore contain a (learnable) periodic cycle – e.g. the day-night cycle or blocks of identical houses. |

| Repeatability | Both fully deterministic and partially stochastic environments must be fully repeatable, for traceable transparency. |

| REF | Thorisson, Bieger, Schiffel & Garrett |
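
The Determinism, Continuity and Repeatability rows can be illustrated together. Below is a minimal Python sketch, assuming a hypothetical one-dimensional, step-lock toy environment (the class `ToyEnvironment`, the helper `rollout` and the parameters `seed`, `noise_std` and `grid_step` are invented for illustration, not part of any benchmark): a `noise_std` of zero makes the environment fully deterministic, `grid_step` sets the discretization granularity of an otherwise continuous variable, and giving each environment its own seeded random number generator makes even the stochastic version exactly repeatable.

```python
import random

class ToyEnvironment:
    """Hypothetical 1-D step-lock task-environment with evaluator-controlled features."""

    def __init__(self, seed=0, noise_std=0.0, grid_step=None):
        self.rng = random.Random(seed)  # private, seeded RNG: same seed, same run
        self.noise_std = noise_std      # 0.0 makes the environment fully deterministic
        self.grid_step = grid_step      # None leaves observations continuous
        self.position = 0.0             # the true state itself is always continuous

    def observe(self):
        """Return the state, discretized to grid_step if one is set."""
        if self.grid_step is None:
            return self.position
        return round(self.position / self.grid_step) * self.grid_step

    def step(self, action):
        """Apply the agent's action plus optional Gaussian noise, then observe."""
        noise = self.rng.gauss(0.0, self.noise_std) if self.noise_std > 0 else 0.0
        self.position += action + noise
        return self.observe()

def rollout(env, actions):
    """Feed a fixed action sequence to an environment and record its observations."""
    return [env.step(a) for a in actions]

actions = [0.3, -0.1, 0.25, 0.4]
first = rollout(ToyEnvironment(seed=42, noise_std=0.05), actions)
second = rollout(ToyEnvironment(seed=42, noise_std=0.05), actions)
print(first == second)  # True: stochastic, yet repeatable for a given seed
```

Keeping the random number generator inside each environment, rather than using the global one, is what lets an evaluator replay a stochastic run exactly.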

Requirements for Evaluation: Settings That Must Be Obtainable

| Complexity | ENVIRONMENT IS COMPLEX WITH DIVERSE INTERACTING OBJECTS |

| Dynamicity | ENVIRONMENT IS DYNAMIC |

| Regularity | TASK-RELEVANT REGULARITIES EXIST AT MULTIPLE TIME SCALES |

| Task Diversity | TASKS CAN BE COMPLEX, DIVERSE, AND NOVEL |

| Interactions | AGENT/ENVIRONMENT/TASK INTERACTIONS ARE COMPLEX AND LIMITED |

| Computational limitations | AGENT COMPUTATIONAL RESOURCES ARE LIMITED |

| Persistence | AGENT EXISTENCE IS LONG-TERM AND CONTINUAL |

| REF | Laird et al. |

Controllers & Agents

Centrifugal Governor

| What it is | A mechanical system for controlling the power (and thereby the speed) of a motor or engine. Centrifugal governors were used to regulate the distance and pressure between millstones in windmills in the 17th century. REF |

| Why it's important | Earliest example of automatic regulation with proportional feedback. |

| Modern equivalents | Servo motors (servos), PID control. |

| Curious feature | The represented signal and the control (action) output use the same mechanical system, fusing the represented information with the control mechanism. This is the least flexible way of implementing control. |
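
The proportional-feedback principle behind the governor can be simulated in a few lines. This is a minimal Python sketch under loudly stated assumptions: the toy engine model (`gain`, `load`, `damping`), the set-point and all numbers are invented for illustration, and no flyball mechanics are modeled. The throttle opening simply falls in proportion to how far the speed is above the set-point.

```python
def simulate(load, kp, steps=2000, dt=0.1, setpoint=50.0, bias=0.5,
             gain=10.0, damping=0.1):
    """Euler simulation of a toy engine; kp=0.0 means no governor (fixed throttle).

    All parameters are hypothetical illustration values, not physical data.
    """
    omega = setpoint  # engine speed, starting at the set-point
    for _ in range(steps):
        # Proportional feedback: close the throttle as speed rises above the set-point.
        throttle = bias - kp * (omega - setpoint)
        throttle = min(1.0, max(0.0, throttle))  # a valve can only be 0..100% open
        # Speed changes with drive torque minus external load and friction.
        omega += dt * (gain * throttle - load - damping * omega)
    return omega

open_loop = simulate(load=1.0, kp=0.0)  # no regulation
governed = simulate(load=1.0, kp=0.1)   # proportional regulation
print(round(open_loop, 2), round(governed, 2))
```

With these numbers, adding a load makes the unregulated engine slow from 50 to about 40, while the proportional regulator holds it near 49.1. The small remaining offset (droop) is characteristic of purely proportional control. Note also that measurement and actuation here are separate lines of code, as in a digital controller, whereas in the mechanical governor they are one and the same linkage.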

Generalization

Key Features of Feedback Control

| Sensor | A kind of transducer that changes one type of energy to another type. |

| Decider | A Decision Process is a function whose output is a commitment to a particular action. Both computing a decision and implementing the action committed to may take a long time, so the exact moment of commitment is not necessarily a single, infinitely small moment in time. However, it is often treated as such. |

| Actuator | A physical or virtual mechanism that implements an action that has been committed to. |

| Principle | Predefined causal connection between a measured variable <m>v</m> and a controllable variable <m>v_c</m> where <m>v = f(v_c)</m>. |

| Mechanical controller | Fuses the control mechanism with the measurement mechanism via mechanical coupling. Adaptation would require the mechanical structure itself to change, which makes adaptation very difficult to implement. |

| Digital controllers | Separate the stages of measurement, analysis, and control, which makes adaptive control feasible. |

| Feedback | For a variable <m>v</m>, information about its value at time <m>t_1</m> is transmitted back to the controller through a feedback mechanism as <m>v'</m>, which arrives only at a later time <m>t_2 > t_1</m>, so that <m>v'(t_2) = v(t_1)</m>. That is, there is a latency in the transmission, which is a function of the speed of transmission (encoding (measurement) time + transmission time + decoding (read-back) time). |

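| Latency | A measure of the size of the temporal difference between <m>v</m> and <m>v'</m>, i.e. how long after <m>v</m> takes a value that value becomes available to the controller as <m>v'</m>. |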

| Jitter | The change in latency over time; a second-order latency. |
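
The Feedback, Latency and Jitter rows can be made concrete with a small bookkeeping sketch in Python. The `Sample` record and the helpers `feed_back`, `latencies` and `jitter` are hypothetical names introduced here; the sketch only assumes, as the Feedback row states, that latency is the sum of encoding (measurement), transmission and decoding (read-back) time, and it computes jitter as the change in latency between consecutive samples.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    value: float        # the value of v that was measured
    t_measured: float   # time t_1 at which v had this value
    t_received: float   # time at which the controller reads it back as v'

def feed_back(value, t_measured, encode_time, transmit_time, decode_time):
    """Send one measurement back to the controller over a delayed channel."""
    latency = encode_time + transmit_time + decode_time
    return Sample(value, t_measured, t_measured + latency)

def latencies(samples):
    """Per-sample latency: how long after v had a value the controller sees it."""
    return [s.t_received - s.t_measured for s in samples]

def jitter(samples):
    """Change in latency from one sample to the next (second-order latency)."""
    lat = latencies(samples)
    return [later - earlier for earlier, later in zip(lat, lat[1:])]

# Usage: three measurements, one per time unit, over a channel whose
# transmission time varies (times chosen as exact binary fractions).
samples = [
    feed_back(v, t, encode_time=0.25, transmit_time=tx, decode_time=0.25)
    for v, t, tx in [(1.0, 0.0, 0.5), (1.5, 1.0, 0.75), (1.2, 2.0, 0.5)]
]
print(latencies(samples))  # [1.0, 1.25, 1.0]
print(jitter(samples))     # [0.25, -0.25]
```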

Key Features of Feedforward (predictive) Control

| Feedforward | Using prediction, the control signal <m>v</m> can be changed before perturbations of <m>v</m> happen, so that the output of the plant, <m>o</m>, stays constant. |

| What it requires | This requires information about the entity controlled in the form of a predictive model, and a second set of signals <m>p</m> that are antecedents of <m>o</m> and can thus be used to predict the behavior of <m>o</m>. |

| Signal behavior | When predicting a time-varying signal <m>v</m>, the frequency of change, the possible patterns of change, and the magnitude of change of <m>v</m> are of key importance, as are the same factors for the signals <m>p</m> used to predict its behavior. |

| Learning predictive control | By deploying a learner capable of learning predictive control, more robust behavior can be achieved in the controller, even with low sampling rates. |

| Challenge | Unless we know beforehand which signals cause perturbations in <m>o</m> and can hard-wire these from the get-go in the controller, the controller must search for these signals. In task-domains where the number of available signals is vastly greater than the controller's search resources, it may take an unacceptable time for the controller to find good predictive variables. |
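
The Challenge row can be illustrated with a minimal Python sketch of learning a feedforward controller. All specifics are assumptions made for illustration: the plant is simply `o = u + d`, the perturbation `d` is secretly twice the previous value of one of ten random candidate signals, and the controller "searches" by fitting a least-squares slope for every candidate and keeping the one with the smallest residual. Once the antecedent `p` is found, the control signal is changed one step before the perturbation arrives, so `o` stays at the target. The helper names are hypothetical.

```python
import random

def make_signals(n_signals, length, seed=0):
    """Hypothetical candidate signals the controller could observe."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(length)] for _ in range(n_signals)]

def fit_slope(xs, ys):
    """Least-squares fit of ys ~ a * xs (line through the origin); returns (a, residual)."""
    a = sum(x * y for x, y in zip(xs, ys)) / sum(x * x for x in xs)
    residual = sum((y - a * x) ** 2 for x, y in zip(xs, ys))
    return a, residual

def find_predictor(signals, disturbance):
    """Try every candidate: does its previous value predict the next perturbation?"""
    best = None
    for j, s in enumerate(signals):
        a, residual = fit_slope(s[:-1], disturbance[1:])
        if best is None or residual < best[2]:
            best = (j, a, residual)
    return best

# Hidden from the controller: the perturbation is 2x signal #3, one step later.
signals = make_signals(n_signals=10, length=200, seed=1)
disturbance = [0.0] + [2.0 * x for x in signals[3][:-1]]

j, a, _ = find_predictor(signals, disturbance)   # learning phase: search + fit
target = 5.0
outputs = []
for k in range(1, len(disturbance)):
    u = target - a * signals[j][k - 1]   # change the control signal before d arrives
    outputs.append(u + disturbance[k])   # plant output o = u + d
print(j, round(a, 6), min(outputs), max(outputs))
```

The loop in `find_predictor` costs one fit per candidate signal; that per-candidate cost is what becomes prohibitive when the number of available signals vastly exceeds the controller's search resources.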

Intelligent Agents

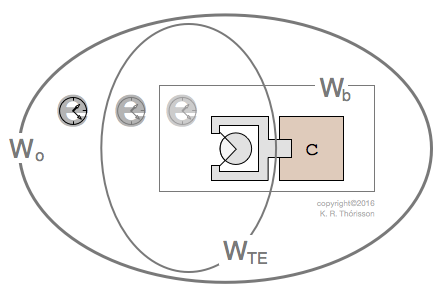


| A simple control pipeline consists of at least one sensor, at least one control process of some sort, and at least one end effector. In the figure, a := afferent copy. |
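
The pipeline in the caption can be written as three composed functions. This Python sketch assumes a hypothetical thermostat: the sensor quantizes temperature to 0.5-degree steps, the control process commits to turning the heater on below 20 degrees, and the end effector changes the temperature. The component names and numbers are invented, and the afferent copy shown in the figure is not modeled.

```python
from typing import Callable

def make_pipeline(sensor: Callable[[float], float],
                  controller: Callable[[float], bool],
                  effector: Callable[[float, bool], float]) -> Callable[[float], float]:
    """Chain sensor -> control process -> end effector into one control cycle."""
    def tick(world_state: float) -> float:
        measurement = sensor(world_state)      # transduce the world into a signal
        action = controller(measurement)       # commit to an action
        return effector(world_state, action)   # act on the world
    return tick

def thermometer(temp: float) -> float:
    return round(temp * 2) / 2                 # finite precision: 0.5-degree steps

def thermostat(reading: float) -> bool:
    return reading < 20.0                      # heater on below 20 degrees

def heater(temp: float, heat_on: bool) -> float:
    return temp + (0.5 if heat_on else -0.25)  # heating or passive cooling per cycle

tick = make_pipeline(thermometer, thermostat, heater)
temp, history = 18.0, []
for _ in range(12):
    temp = tick(temp)
    history.append(temp)
print(history)
```

Keeping the three stages as separate, swappable functions mirrors the separation of measurement, analysis and control that makes adaptive control feasible.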

AGI Controller

| Nested feedforward + feedback control | For a general intelligence, neither feedback alone nor feedforward alone is sufficient. They must be used in combination. |

| Coordination | In natural intelligence, and in the agents we consider, feedback and prediction are expected to be used within the same system, in coordination. This coordination must be provided by the right architecture. |

| Cognitive Architecture | The collected and integrated operation of a set of processes intended for intelligent control capable of learning. |

| Natural intelligence | Evidence exists for integrated feedback/feedforward control in natural systems, in particular with respect to motor control. |
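
One simple way to combine the two is sketched below in Python under invented assumptions: the plant is `o = u + d`, the perturbation `d` has a part predictable from an antecedent signal `p` plus a constant offset that the predictive model does not know about, and feedback is an integral term updated from the measured error. It illustrates why neither mechanism suffices alone in this toy setting; it is not the architecture discussed in the course, and `run` and `tail_mean` are hypothetical helpers.

```python
import math

def run(mode, steps=200, target=5.0, ki=0.5):
    """Simulate one control mode; return absolute output errors |target - o| per step.

    mode is "feedback", "feedforward" or "combined".
    """
    use_ff = mode in ("feedforward", "combined")
    use_fb = mode in ("feedback", "combined")
    p = [math.sin(0.3 * k) for k in range(steps)]   # antecedent signal p
    integral = 0.0
    errors = []
    for k in range(1, steps):
        d = 2.0 * p[k - 1] + 0.5      # true perturbation: predictable part + unmodeled offset
        u = target
        if use_ff:
            u -= 2.0 * p[k - 1]       # predictive model: knows the antecedent, not the offset
        if use_fb:
            u += integral             # feedback: correction accumulated from past errors
        o = u + d                     # plant output
        if use_fb:
            integral += ki * (target - o)   # measured error updates the correction
        errors.append(abs(target - o))
    return errors

def tail_mean(errors, n=50):
    """Mean absolute error over the last n steps, after start-up transients."""
    return sum(errors[-n:]) / n

for mode in ("feedback", "feedforward", "combined"):
    print(mode, round(tail_mean(run(mode)), 3))
```

With these arbitrary settings, feedforward alone leaves the unmodeled 0.5 offset, feedback alone keeps lagging the changing perturbation, and the combination drives the error towards zero.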
